Bellman Optimality Equation

- Markov Process

- Markov Reward Process

- Bellman Expectation Equation

- > Bellman Optimality Equation

- RL Overview

- General Policy Iteration (GPI)

- Monte Carlo Method Of Finding Values

- TD Method Of Finding Values

- Markov Decision Process

The bellman optimality equation tells you what the optimal value is for a particular state, given that you know the optimal value for the neighboring states.

The optimal value function tells you that if you start in a particular state, and you behave optimally, what is the return that you’ll get?

You don’t need to know a policy to find the optimal value function. You can use the value iteration algorithm.

By neighboring states, I mean states that are immediately reachable from a particular state (via actions).

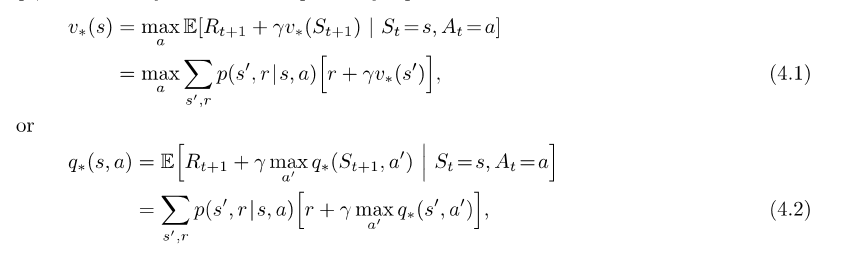

Here is bellman’s optimality equation (taken from Sutton’s RL book), for now just look at the top one:

- to find the optimal value for state s

- for each action available from state s

- find expected return if you take that action (explained shortly)

- optimal value for state s is simply the max of all the expected returns of the various actions

The expected return for taking action a is simply that, “on average” what return can I expect for taking this path? Start at action a, look at all the subsequent states/rewards you could possible end up in from action a, and what the probability of ending up there is. Remember, expectation is just probability * value, summed over all possible values. So the expected return for an action is the probability of a taking a certain path (i.e. ending up in a certain subsequent state and getting a certain reward) times the value for that path, summed up over all possible paths!

To find the expected return for taking an action a

- set action_expectation = 0

- for each (subsequent_state,reward) pair that could occur by taking this action (“for each possible path”)

- action_expectation += probability of ending up in (subsequent_state,reward) * (reward + discount_factor * value(subsequent_state))

Note:

- you can make an initial guess for the optimal values for all the states in your MDP (literally any guess is ok, I just use all 0’s)

- then apply the bellman optimality equation on each state to improve the guess for that state

- so now you have better guesses for each state of your MDP

- you can apply the bellman optimality equation once more on each state to further improve the guesses

- you can keep on repeating this, until applications of the bellman optimality equation result in only minor changes to your values (“terminating condition”)

- this iterative approach to improving your guesses for the optimal values, is called value iteration

- arbitrarily initialize values for each state (they can literally be anything, i use 0’s)

- while true

- for each state

- use bellman’s optimality equation to update the value

- terminating condition: keep doing this until you notice that the value for your states aren’t changing by much (some threshold)

A nice little one sentence summary of value iteration is

Start with arbitrary values, iteratively apply bellman’s optimality equations to improve values until they seem to stop changing by much.

The second equation (equation 4.2) is looking at the same thing really, but from an action perspective. Spend a few minutes to put the equation into words and you’ll get it!